Diagrams AI Can, and Cannot, Generate

By now, generative artificial intelligence’s ability to create text and images is well known. Generating system architecture diagrams would seem to be a natural extension of this. In this article, we examine three use cases for AI-generated system architecture diagrams. We will evaluate AI’s ability to create generic diagrams focused on technology, whiteboard diagrams for planned or proposed future systems, and system diagrams that detail real-life, existing systems.

Generating generic AI diagrams

First, a definition: generic diagrams in this context are diagrams not associated with source code or a deployed solution, present or future. They are usually entirely decorative or explain how a technology like AWS or Kubernetes works. Since they don’t describe an actual solution, generic diagrams have a lot of leeway regarding accuracy. Any sufficiently plausible diagram is acceptable.

To start, let’s ask ChatGPT (GPT-4o) for something simple:

Hello. Can you generate an image of a diagram of a typical AWS serverless system?

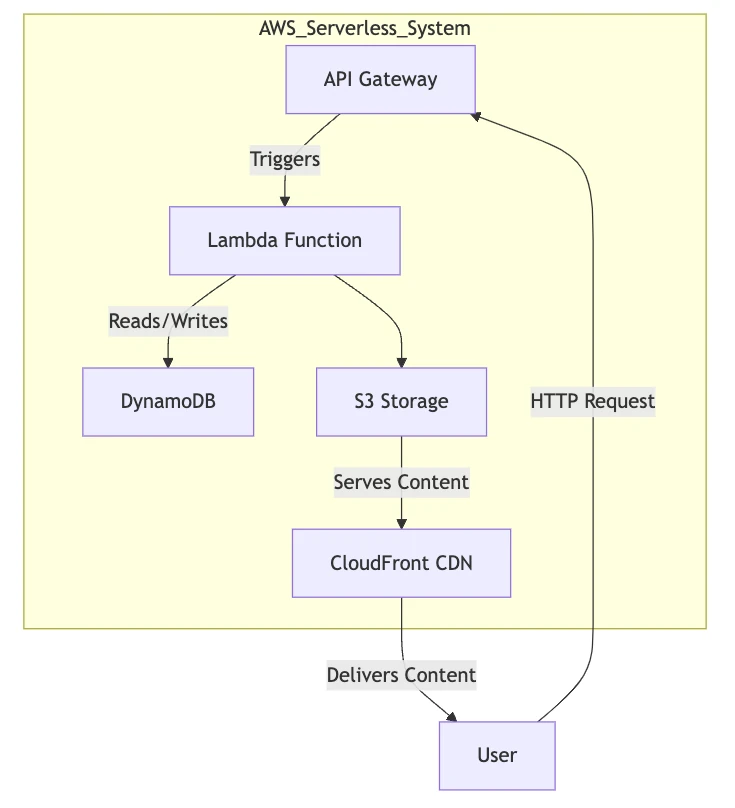

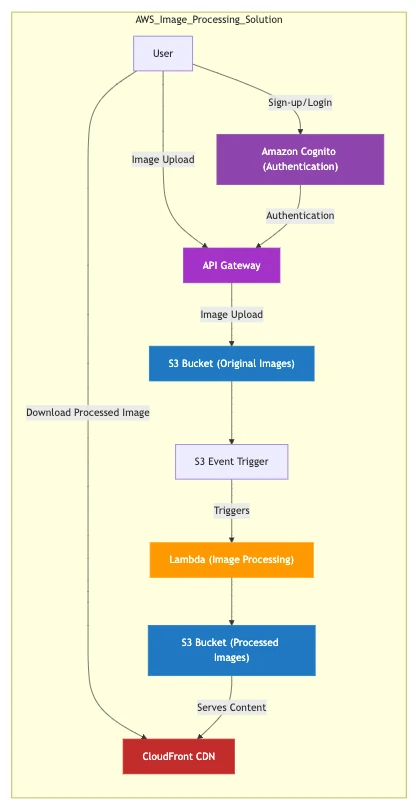

The result:

ChatGPT nails it on the first try. While not pretty, the diagram contains all the critical elements of a simple 3-tiered AWS serverless system: S3 for file storage, a DynamoDB database, Lambda for compute, and API Gateway for presentation. The AI presumed we wanted a web app and included a CDN.

ChatGPT’s result is impressive, though in practice it isn’t better than an image search for the same thing. Most people would prefer the latter for its variety and aesthetics alone.

Regardless of where they come from, generic diagrams are of little value. Paying even a dime a dozen would be a bad deal. So, let’s move on to the more interesting case of whiteboarding.

Whiteboarding with AI

Whiteboarding is the act of diagramming a proposed future system with well-defined functionality. The purpose is to identify problems and explore potential solutions. Whiteboard diagrams are more detailed than generic diagrams (see above) but less detailed than system diagrams, which we will examine in the next section.

To get such a diagram, we naturally prompt ChatGPT with more detail about the proposed system’s goals:

Please generate a mermaid diagram of a browser-based image processing and storage solution using a serverless AWS pattern. It should handle user sign-up and authentication, allow users to upload and process images, and download the results. It should also allow users to store both the original and processed images. Assume AWS lambda handles all image processing with libraries to be determined.

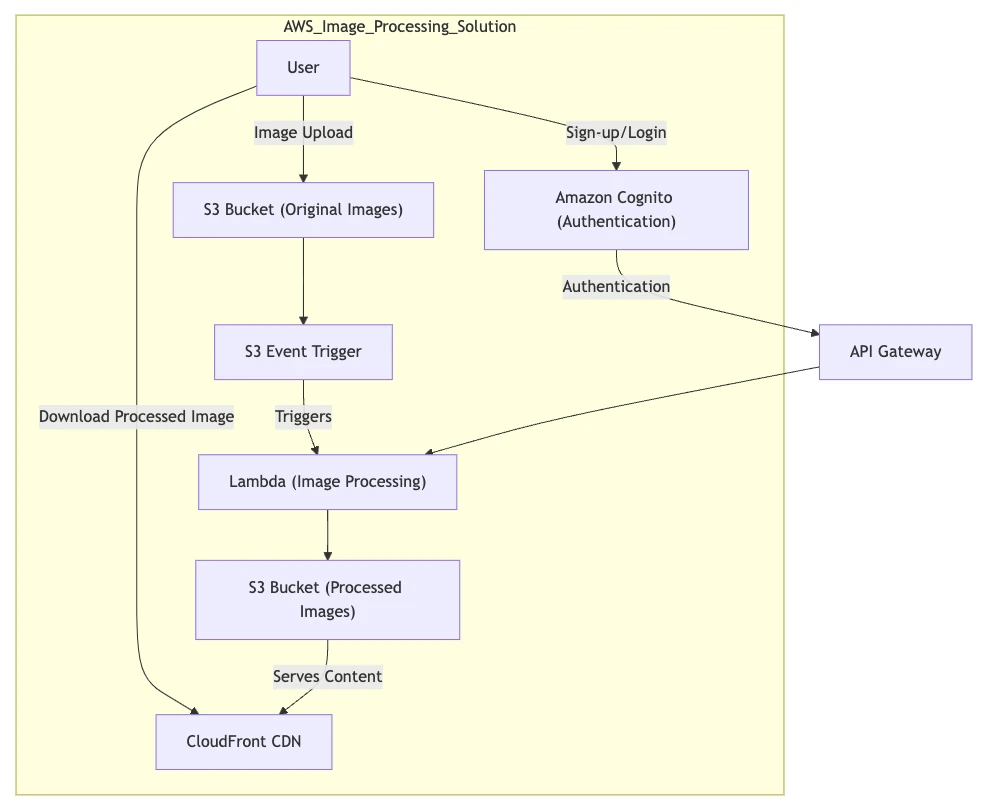

This result is a good start. The key serverless components are again present, and this time, some of them are named according to their purpose: Original Images and Processed Images (S3 buckets), and Image Processing (Lambda function).

There are also a few issues:

- User should be outside the solution box, and API Gateway should be in it.

- There should be a link from User to API Gateway; otherwise the gateway serves no purpose.

- This flow chart mixes authentication, upload, and download into a single flow. It would be clearer if these were separate perspectives.

Let’s fix these with the following prompt:

Let’s start with some cleanup. Can you move the “User” element outside of the “AWS_Image_Processing_Solution” box and move the “API Gateway” element inside of it?

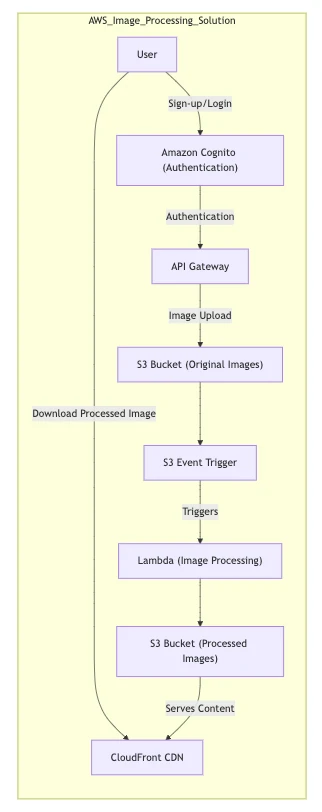

User is still inside the box, but it is a step in the right direction. Let’s refine further:

Please add an arrow from the “User” element to the “API Gateway” element that is labeled “Image Upload.” Also, is it possible to add AWS icons and use AWS colors for the elements?

ChatGPT helpfully replied that Mermaid doesn’t natively support icons in nodes and suggested some alternative tools. It did add colors, however:

It’s unfortunate that there is no icon support, and the color branding isn’t quite correct, but it looks better in any case.

Moving on, can the diagram be improved structurally? Right now, it is a flowchart showing three flows in one. This incorrectly implies that (for example) signing up and logging in could trigger image processing downstream. Let’s fix:

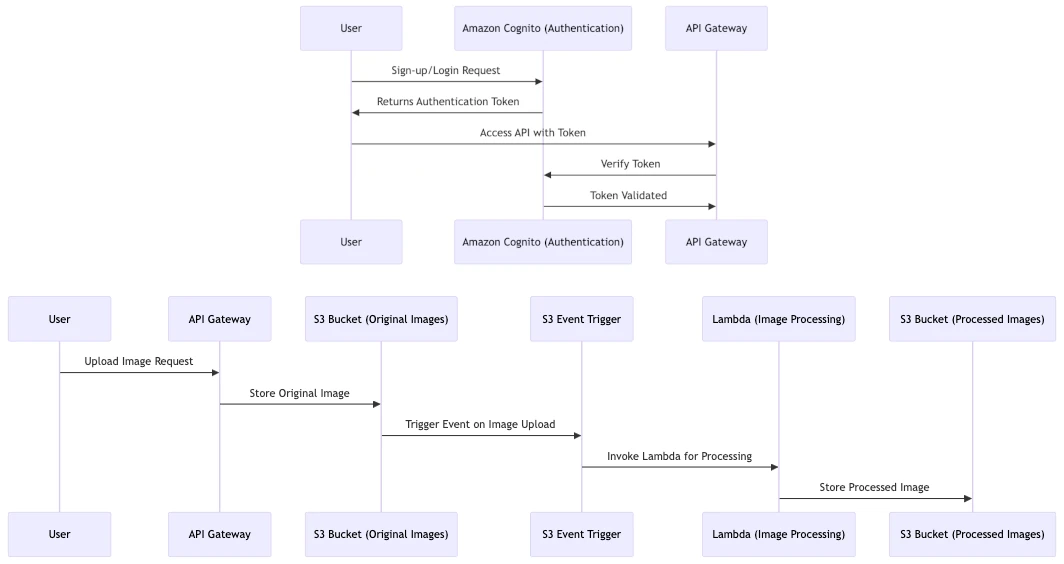

Instead of a flowchart, can this be represented as one or more sequence diagrams?

Not too bad! It correctly split up the flows (though it is a shame the colors are gone). Let’s fix up some things:

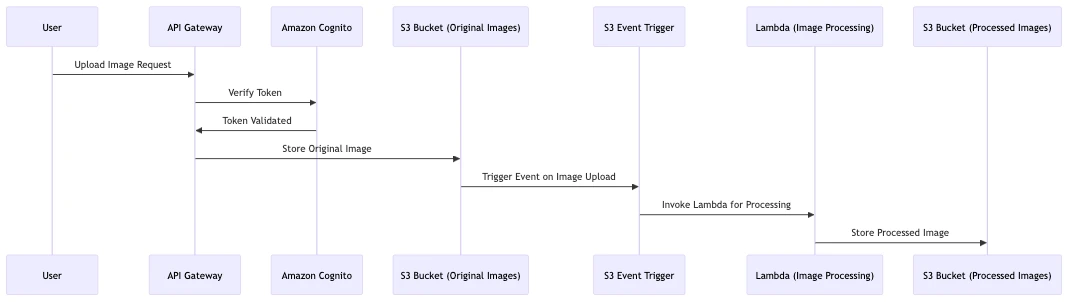

Can you move “Access API with Token”, “Verify Token”, and “Token Validated” steps to the second sequence diagram? They should occur between “Upload Image Request” and “Store Original Image”

Very nice; it works as requested.
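To make the split concrete, here is a hand-written sketch of the corrected upload flow, written as a small Python script (3.9+) that emits Mermaid sequence-diagram text. Only the five step labels come from the diagram discussed above; the participant IDs, the generic “Auth Service” (the article doesn’t name the authentication service), and which participant sends each message are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str  # participant ID
    receiver: str  # participant ID
    label: str
    reply: bool = False  # replies render as dashed arrows (-->>)


# Participant IDs (keys) and display names (values).
# "Auth Service" is hypothetical; the article does not name the auth service.
PARTICIPANTS = {
    "User": "User",
    "APIGW": "API Gateway",
    "Auth": "Auth Service",
    "Originals": "Original Images",
}

# Step labels are from the article; senders and receivers are assumptions.
UPLOAD_FLOW = [
    Message("User", "APIGW", "Upload Image Request"),
    Message("User", "APIGW", "Access API with Token"),
    Message("APIGW", "Auth", "Verify Token"),
    Message("Auth", "APIGW", "Token Validated", reply=True),
    Message("APIGW", "Originals", "Store Original Image"),
]


def to_sequence_diagram(participants: dict[str, str], messages: list[Message]) -> str:
    """Render one flow as Mermaid sequenceDiagram source."""
    lines = ["sequenceDiagram"]
    for pid, name in participants.items():
        lines.append(f"    participant {pid} as {name}")
    for m in messages:
        arrow = "-->>" if m.reply else "->>"
        lines.append(f"    {m.sender}{arrow}{m.receiver}: {m.label}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_sequence_diagram(PARTICIPANTS, UPLOAD_FLOW))
```

Keeping each flow as its own list of messages is what avoids the single flowchart’s false implication that signing up could trigger image processing; authentication and download would each get a separate list rendered the same way.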

Whiteboarding with ChatGPT is clearly feasible, at least for small projects. It provided an excellent initial diagram and readily accepted refinements. The only significant blind spot was icons, which is more a shortcoming of Mermaid than of ChatGPT.

That said, AI-assisted whiteboarding provides fewer benefits than it first appears to. Hand-holding the AI through refinements is time-consuming and potentially expensive. The alternative, directly using a diagrams-as-code tool (like Mermaid or Ilograph), provides more control with fewer keystrokes, at the cost of learning a DSL (domain-specific language). Readers should try both approaches to gauge which they prefer.
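As a rough illustration of the diagrams-as-code route, the sketch below states the layout fixes from the refinement prompts above (User outside the solution box, API Gateway inside it, and a labeled “Image Upload” arrow between them) as plain Python data, then renders Mermaid flowchart text. The node IDs and every edge other than User to API Gateway are hypothetical; Ilograph uses its own format and isn’t shown.

```python
from typing import Optional

# Hypothetical node IDs; display labels follow the element names in the article.
OUTSIDE = {"User": "User"}
INSIDE = {
    "APIGW": "API Gateway",
    "Processing": "Image Processing",
    "Originals": "Original Images",
    "Processed": "Processed Images",
}
# Only the labeled User -> API Gateway edge comes from the article;
# the others are assumptions for illustration.
EDGES: list[tuple[str, str, Optional[str]]] = [
    ("User", "APIGW", "Image Upload"),
    ("APIGW", "Processing", None),
    ("Processing", "Originals", None),
    ("Processing", "Processed", None),
]


def to_flowchart(box: str, outside: dict[str, str], inside: dict[str, str],
                 edges: list[tuple[str, str, Optional[str]]]) -> str:
    """Render Mermaid flowchart source with the `inside` nodes grouped in a subgraph."""
    lines = ["flowchart LR"]
    for nid, label in outside.items():
        lines.append(f'    {nid}["{label}"]')
    lines.append(f"    subgraph {box}")
    for nid, label in inside.items():
        lines.append(f'        {nid}["{label}"]')
    lines.append("    end")
    for src, dst, label in edges:
        # Mermaid edge labels use the -->|label| form.
        arrow = f"-->|{label}|" if label else "-->"
        lines.append(f"    {src} {arrow} {dst}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(to_flowchart("AWS_Image_Processing_Solution", OUTSIDE, INSIDE, EDGES))
```

Each fix that took a prompt round trip is a one-line change here, which is the trade-off described above: more direct control, in exchange for learning the notation.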

System diagramming with AI

We’ll significantly increase the difficulty by asking ChatGPT to diagram a real-life, deployable system. In the last section, we asked it to diagram a system described in prose. Now, we’ll ask it to diagram a system from source code.

Diagramming a real-life system is much more challenging but also much more valuable, whether AI is used or not. Diagrams of real-life systems serve as visual documentation; they are helpful for onboarding, knowledge refreshment, alignment, and incident response. Unlike when whiteboarding, the details are real, and they matter. Hallucinations are unacceptable.

Instead of a detailed prompt, we ask ChatGPT to diagram a source repository we upload (it could not analyze the link directly). In theory, all the information needed to diagram the system thoroughly is in the repo.

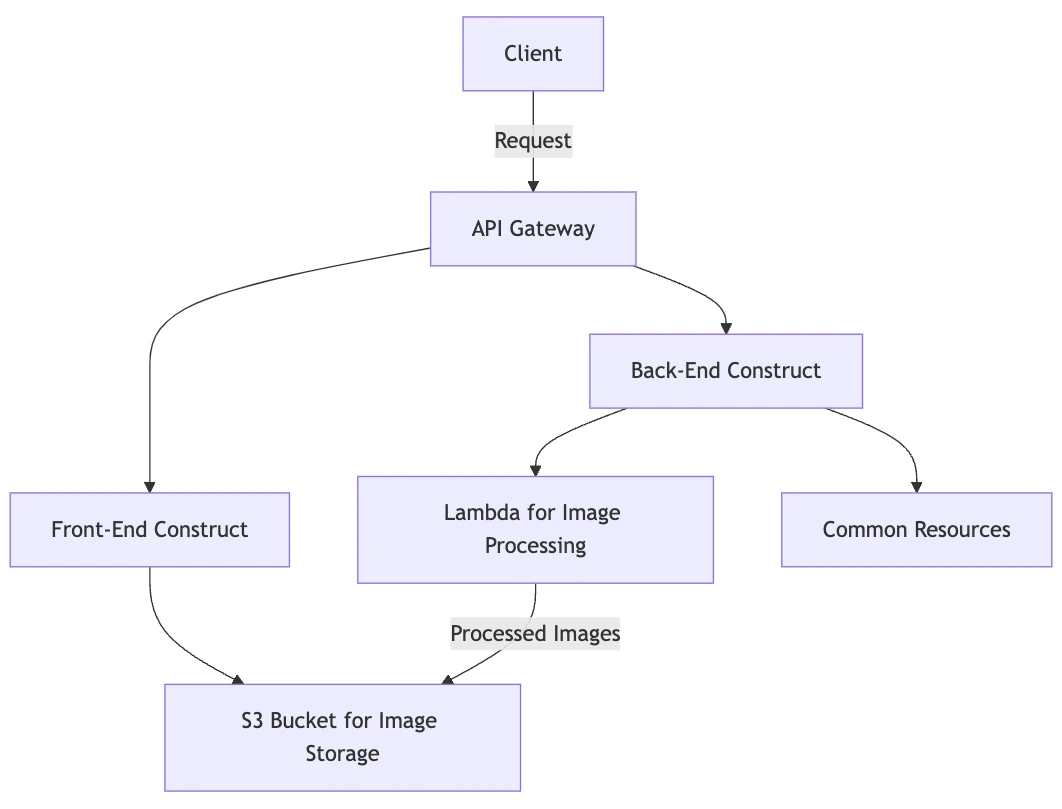

I’d like to switch gears. Instead of creating a diagram of the system I describe, I’d like you to generate one from the attached source code. While a diagram is embedded in the readme, I want you to ignore it and focus on the CDK constructs and other code in the “source” directory. Please output in mermaid format.

The result above is lackluster. It has serious deficiencies:

- The solution is there in only the broadest strokes.

- There is no detail on the constructs, which the prompt explicitly asked for.

- It is unclear what “common resources” means.

This result is even less valuable than the generic diagrams we started with. When asked, ChatGPT refused to detail it further.

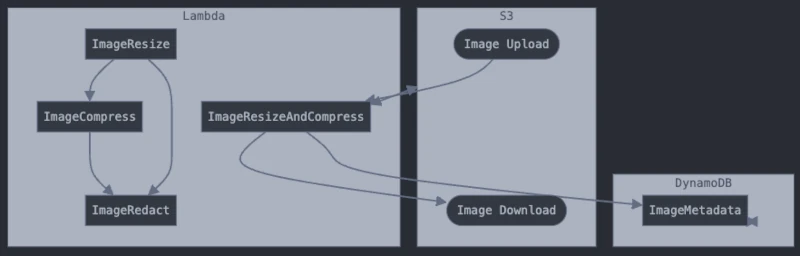

Perhaps we got unlucky, or ChatGPT isn’t well-suited to the task. Unfortunately, the result from Claude.ai, given the same prompt, was not much better:

Like ChatGPT’s diagram, Claude.ai’s is vague and inaccurate. It understands that the solution resizes and redacts images, but it doesn’t show that this is done with Amazon Rekognition. It hallucinates image compression and the use of DynamoDB, neither of which is part of the solution. The diagram’s arrows are also unlabeled and, in some cases, misrendered.

Both AI systems failed rather spectacularly. While we could iterate on both diagrams, doing so would defeat the purpose of having the AI generate diagrams from source code.

The challenges of automated diagram generation

Generating diagrams from source code is a challenging problem, and it’s clear that AI has a ways to go. There are at least three significant challenges to overcome:

Almost no training data

Detailed diagrams of deployable systems are practically nonexistent online. Almost all public repos are code libraries meant to be part of systems, not systems that can be deployed independently. Worse still, practically none of those have diagrams. The diagrams found online are almost exclusively the kind of “generic” diagrams discussed in the first section of this article.

Repositories for deployable systems are mainly in the hands of private companies, with little incentive to release their internal code or diagrams. AIs like ChatGPT and Claude have seen almost no examples of these diagrams; they are flying blind.

Code analysis

Rather than being a purely “generative” exercise, the AI tools must analyze system code. This input is often verbose, making analysis expensive and error-prone. The density and complexity of source code also create a host of challenges:

- It often contains many languages (configuration formats, HTML, programming languages, Bash scripts, etc.), all of which the AI must understand.

- Configuration adds lots of indirection; the AI must work out how variables defined at deploy time are used at run time. Relationships between, for example, compute resources and database resources can be gleaned only with a careful understanding of both code and config.

- When diagramming execution sequences, code can have many branching paths. Most fall outside the “happy path” that diagrams should focus on, and finding that “happy path” is not easy.
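To illustrate the indirection problem, here is a deliberately naive Python sketch (3.9+) that recovers function-to-resource relationships by joining two sources: a deploy-time mapping of environment variables to resources, and the function’s runtime code, parsed with the standard `ast` module to find which variables it actually reads. `DEPLOY_CONFIG` and `HANDLER_SOURCES` are hypothetical stand-ins for what a CDK app and its Lambda code might contain; a real tool would also need to handle `os.getenv`, wrapper helpers, other languages, and values computed at deploy time.

```python
import ast

# Hypothetical deploy-time config: env var -> resource, per function.
DEPLOY_CONFIG: dict[str, dict[str, str]] = {
    "ImageProcessingFn": {
        "ORIGINALS_BUCKET": "OriginalImagesBucket",
        "PROCESSED_BUCKET": "ProcessedImagesBucket",
        "AUDIT_TABLE": "AuditTable",  # configured, but never read below
    },
}

# Hypothetical runtime source for the same function.
HANDLER_SOURCES: dict[str, str] = {
    "ImageProcessingFn": (
        "import os\n"
        "SRC = os.environ['ORIGINALS_BUCKET']\n"
        "DST = os.environ.get('PROCESSED_BUCKET')\n"
    ),
}


def _is_os_environ(node: ast.AST) -> bool:
    """True if `node` is the expression `os.environ`."""
    return (isinstance(node, ast.Attribute) and node.attr == "environ"
            and isinstance(node.value, ast.Name) and node.value.id == "os")


def env_vars_read(source: str) -> set[str]:
    """Names read via os.environ['X'] or os.environ.get('X'); other patterns are ignored."""
    names: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        key = None
        if isinstance(node, ast.Subscript) and _is_os_environ(node.value):
            key = node.slice  # Python 3.9+: the index expression itself
        elif (isinstance(node, ast.Call) and isinstance(node.func, ast.Attribute)
              and node.func.attr == "get" and _is_os_environ(node.func.value)
              and node.args):
            key = node.args[0]
        if isinstance(key, ast.Constant) and isinstance(key.value, str):
            names.add(key.value)
    return names


def infer_edges(config: dict[str, dict[str, str]],
                sources: dict[str, str]) -> set[tuple[str, str]]:
    """Join config and code: an edge exists only if the function reads the variable."""
    edges = set()
    for fn, env in config.items():
        used = env_vars_read(sources.get(fn, ""))
        for var, resource in env.items():
            if var in used:
                edges.add((fn, resource))
    return edges


if __name__ == "__main__":
    for fn, resource in sorted(infer_edges(DEPLOY_CONFIG, HANDLER_SOURCES)):
        print(f"{fn} --> {resource}")
```

Neither input suffices alone: the config lists an `AUDIT_TABLE` the code never reads, and the code on its own sees only opaque variable names. Even this toy join works only because both sides follow a single, predictable access pattern.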

Code doesn’t describe strategy

Thoroughly diagramming a system requires understanding its purpose first and foremost. The diagram author must know this to selectively include and highlight the most critical resources, relations, and interactions (preferably using multiple perspectives). Without this knowledge, there is no hope of creating informative, coherent diagrams.

This intent will not be in the code repository unless it is documented in the readme. Worse, if a deployed solution behaves drastically differently depending on its configuration, the AI cannot know which behavior reflects the solution’s intent.
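A minimal sketch of that last point, assuming a hypothetical `redact_faces` setting and a simplified, hypothetical topology: the same code yields two different sets of relationships, so two deployments would need two different diagrams, and nothing in the code itself says which one is the “real” system.

```python
def deployed_edges(config: dict[str, bool]) -> list[tuple[str, str]]:
    """Edges a diagram of this deployment should show (hypothetical topology)."""
    edges = [
        ("API Gateway", "Image Processing"),
        ("Image Processing", "Processed Images"),
    ]
    # Hypothetical flag: only deployments with redaction enabled call Rekognition.
    if config.get("redact_faces", False):
        edges.append(("Image Processing", "Amazon Rekognition"))
    return edges


if __name__ == "__main__":
    print(deployed_edges({"redact_faces": False}))
    print(deployed_edges({"redact_faces": True}))
```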

Conclusion

AI can help generate diagrams in a fairly narrow case: whiteboarding new systems. However, AI-generated diagrams of real, existing systems are simply not there yet. The dream of auto-updating diagrams to match source code remains elusive.

AI is, and probably forever will be, no substitute for human investigation and human learning. As commonly noted, if you want to learn something thoroughly, try to teach it to others. System diagramming is no exception: one of the best ways to learn an unfamiliar system is to try to diagram it. You will not only learn by creating diagrams, but the next person will learn from your diagrams.

Questions or comments? Please reach out to me on LinkedIn or by email at billy@ilograph.com.